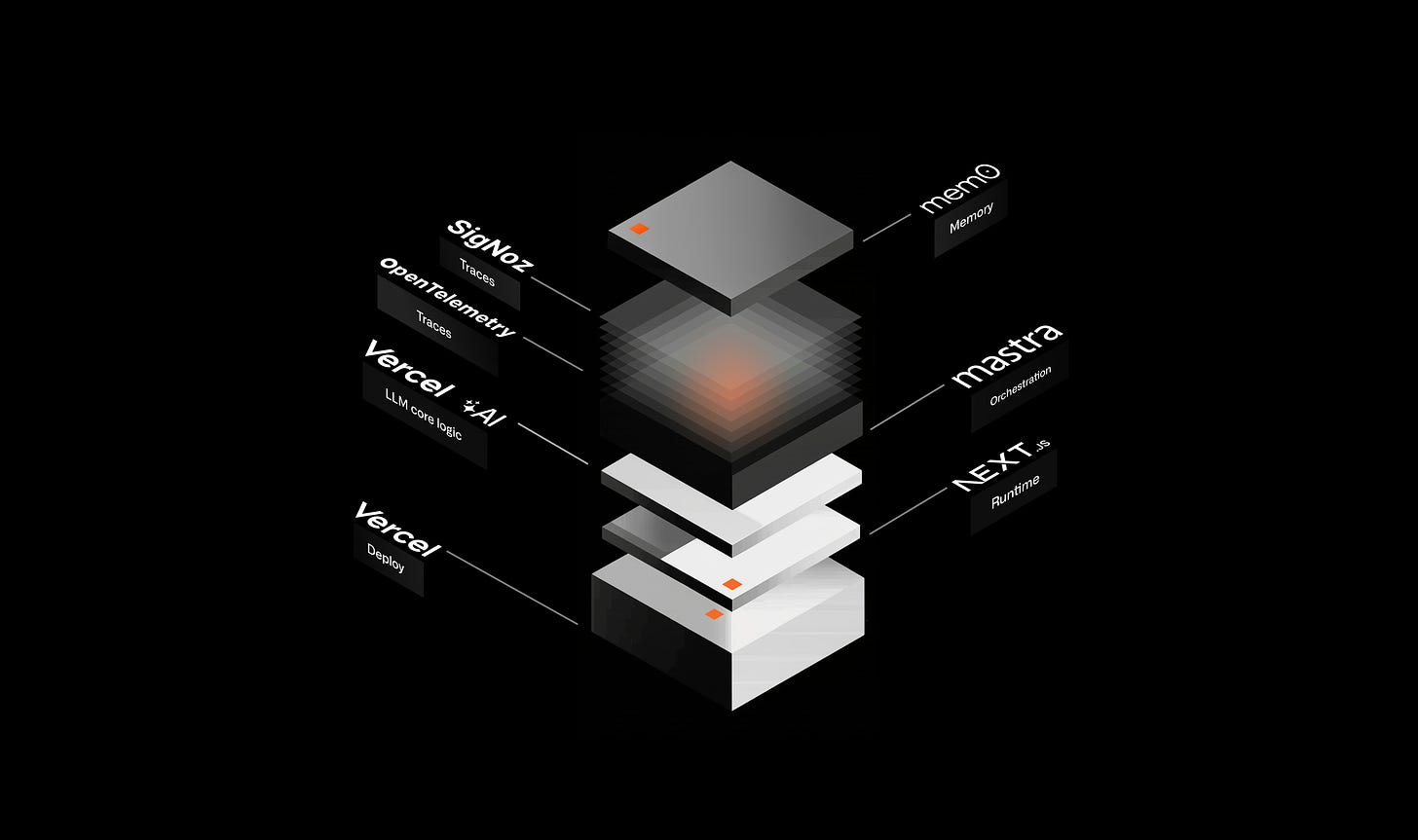

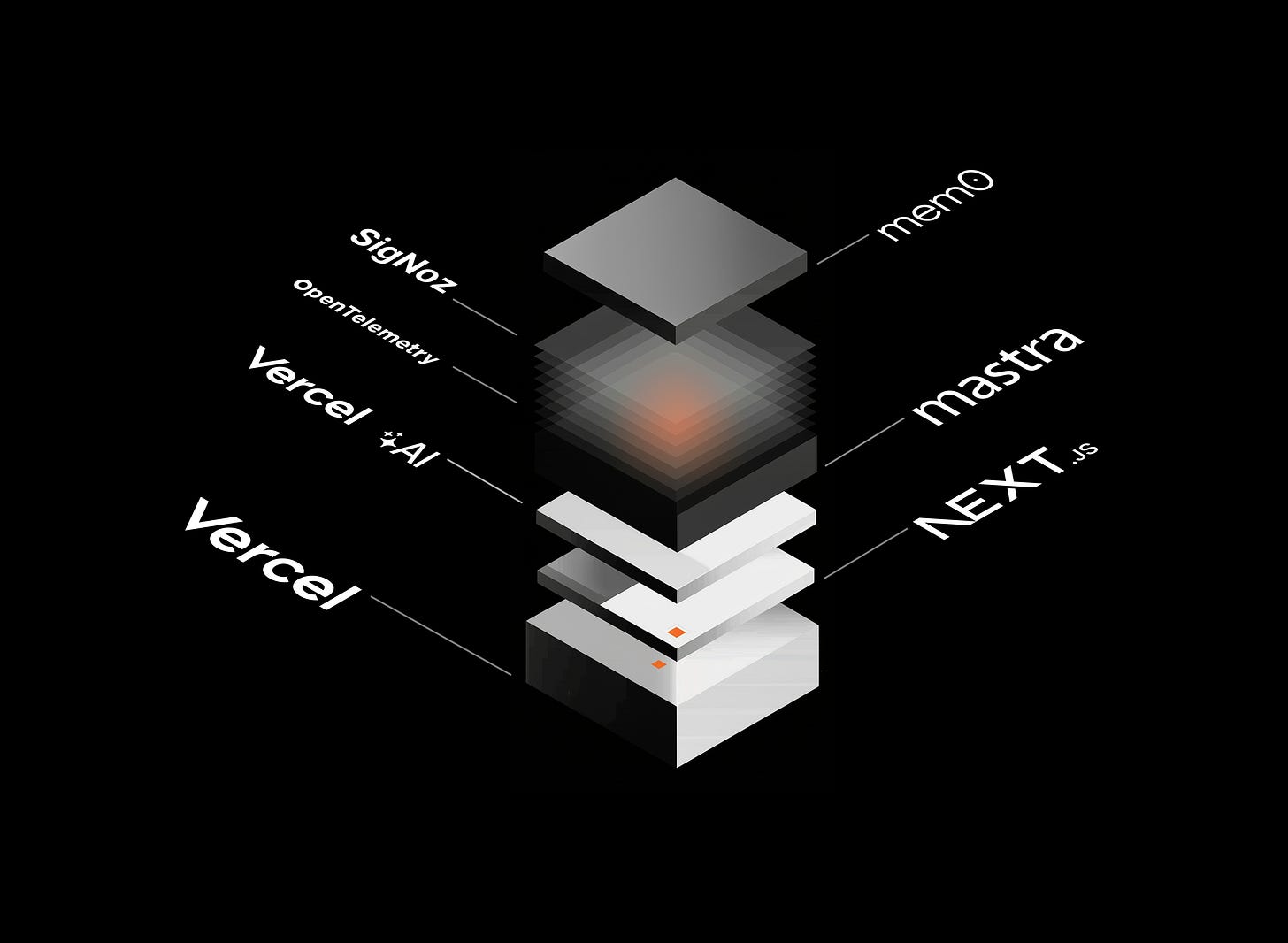

MY PRODUCTION-READY AI AGENT STACK

100% TypeScript Stack for Building Production-Ready AI Agents

After 16 months of building AI agents I finally have a production stack where everything just works together.

Here's what I'm using:

Next.js (runtime)

Vercel AI SDK (LLM core logic)

Mastra (agentic framework)

AI Elements (UI components)

Mem0 (memory layer)

OpenTelemetry & SigNoz (traces)

Vercel (deploy)

It's perfect!

No duct tape. No clunky integrations. Deploys in seconds.

Full-stack TypeScript. One deployment.

Let’s unpack it, and I'll show you why it's so good.

But first, some background…

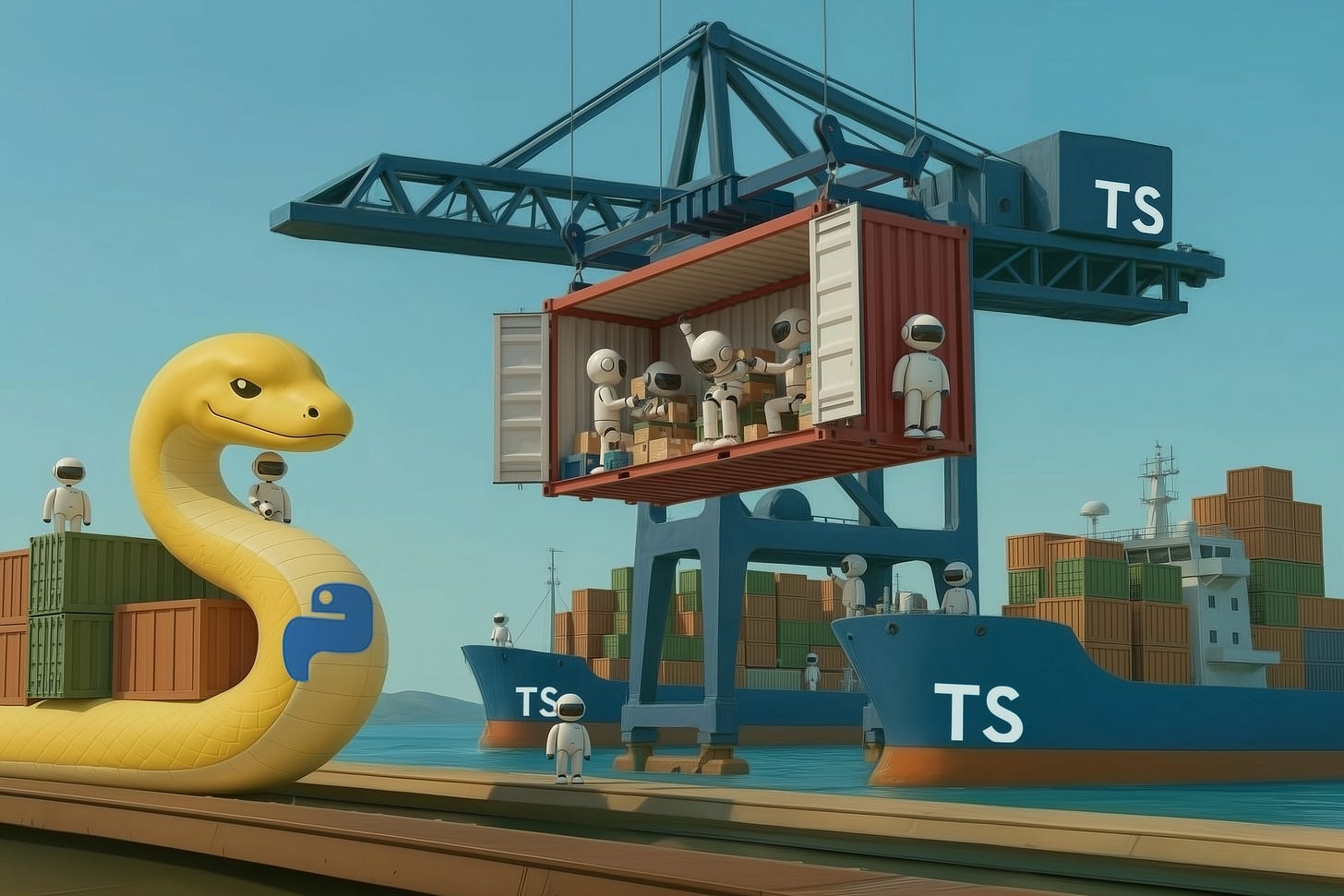

Python vs TypeScript

When you're entering the AI agent dev space, your first big decision is Python vs TypeScript/JavaScript.

Historically, Python dominated AI/ML. Most early agent frameworks (LangChain, CrewAI, AutoGen) were built on Python.

Today there are quite a few amazing agentic frameworks in the Python ecosystem.

My favorites: Google ADK, Agno. You can build powerful agents with those.

Yet Python has a problem: UI.

When you need a modern React-based web UI you're going to need to integrate two stacks: Python for the AI core + JavaScript for the frontend.

Two languages, two ecosystems, two deployments. Workable, not ideal.

But here's the good news: the TypeScript AI ecosystem has really caught up!

And with ▲ Vercel's incredible leadership, it's starting to dominate the builder community.

You can think of it this way:

Python → best for delivering models: data, training, inference, ML ops

TypeScript → ideal for shipping products: UI/UX, business logic, orchestration, scaling

My entire stack runs on TypeScript and relies heavily on the Vercel ecosystem.

Let me walk you through every component of it.

The Breakdown

Next.js: Runtime

Next.js is not just a React framework (a common misconception that still persists).

It's the backbone of the entire app!

It handles:

Agent runtime

API routes (backend <> frontend)

Frontend logic & rendering

UI/UX

Next.js provides a super neat architecture:

your AI logic, API routes, and UI all live in the same codebase.

No need to jump between separate backend and frontend repos.
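To make the "same codebase" point concrete, here is a minimal sketch of a backend endpoint written as a Next.js App Router route handler. The file path, the `runAgent` helper, and the request/response shapes are assumptions for illustration only; a real app would call its agent where the stub is.

```typescript
// app/api/agent/route.ts (hypothetical path, following the App Router convention)

// Hypothetical stand-in for the agent call; a real app would invoke its agent here.
async function runAgent(prompt: string): Promise<string> {
  return `Echo: ${prompt}`;
}

// Small helper to build a JSON response with a status code.
function json(data: unknown, status = 200): Response {
  return new Response(JSON.stringify(data), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

// An exported POST function in route.ts handles POST requests to /api/agent.
export async function POST(request: Request): Promise<Response> {
  const body = (await request.json()) as { prompt?: unknown };
  if (typeof body.prompt !== "string" || body.prompt.length === 0) {
    return json({ error: "prompt is required" }, 400);
  }
  const reply = await runAgent(body.prompt);
  return json({ reply });
}
```

A React component in the same repo can then call `fetch("/api/agent", { method: "POST", body: JSON.stringify({ prompt }) })` with no separate backend service involved.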

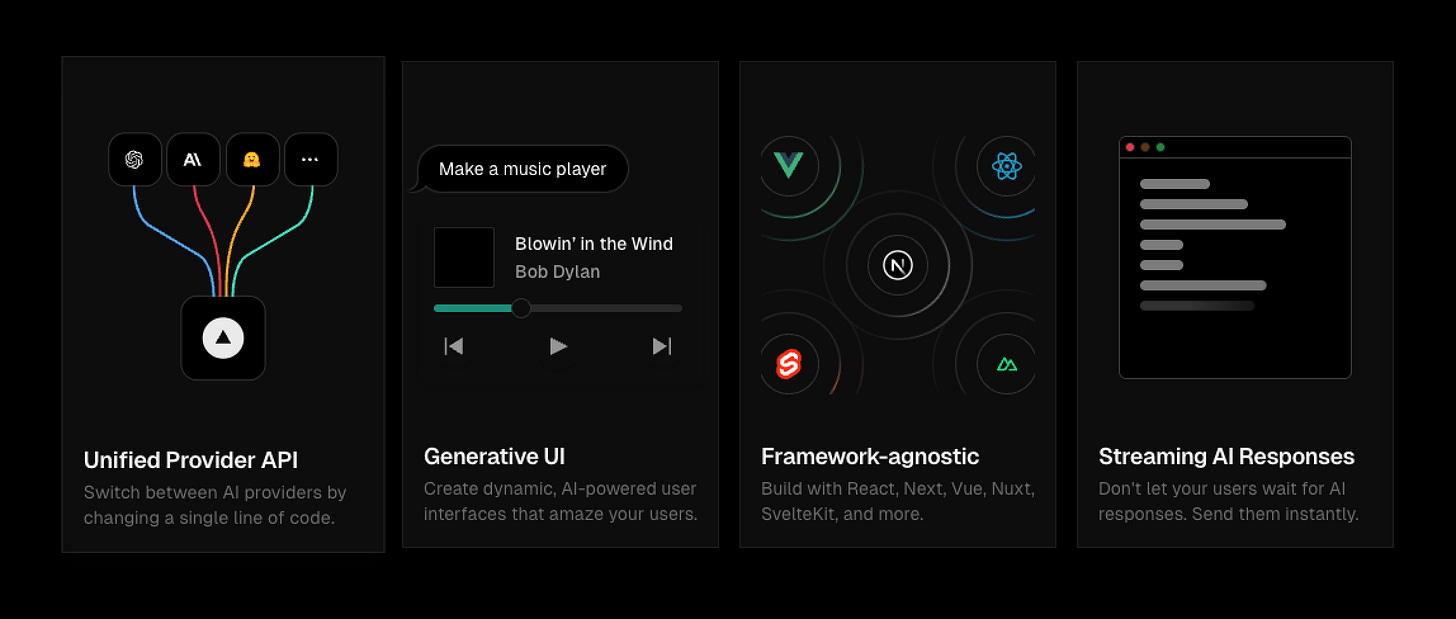

Vercel AI SDK: LLM Scaffolding

A TypeScript SDK for LLM interaction & logic.

It’s an abstraction layer that provides streaming, tool calls, structured outputs, and UI hooks.

Key benefits:

Vercel native [yet works with other frameworks too]

Type safety: 100% TypeScript support

LLM agnostic: You can use OpenAI, Anthropic, Google, Groq and more

Great developer experience: Super clean API + amazing documentation

AI SDK is the foundation agentic frameworks build on.

BTW AI SDK is evolving to include more agentic features itself.
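If "tool calls" sounds abstract: the model emits a tool name plus JSON arguments, and the runtime looks up the matching function and runs it. Below is a dependency-free sketch of that dispatch step. It deliberately does not use the AI SDK API; `Tool`, `ToolCall`, `dispatch`, and `getWeather` are hypothetical names, and the call shape is an assumption.

```typescript
// A tool: a description the model sees, plus the function the runtime executes.
interface Tool {
  description: string;
  execute: (args: Record<string, unknown>) => string;
}

// What a model returns when it decides to call a tool (shape is an assumption).
interface ToolCall {
  toolName: string;
  args: Record<string, unknown>;
}

const tools: Record<string, Tool> = {
  getWeather: {
    description: "Return the weather for a city",
    // Hypothetical canned implementation; a real tool would call an API.
    execute: (args) => `Sunny in ${String(args["city"])}`,
  },
};

// Look up the requested tool and run it. Unknown tools become an error result
// that can be fed back to the model instead of crashing the agent loop.
function dispatch(call: ToolCall): { ok: boolean; result: string } {
  const tool = tools[call.toolName];
  if (!tool) {
    return { ok: false, result: `Unknown tool: ${call.toolName}` };
  }
  return { ok: true, result: tool.execute(call.args) };
}
```

An SDK adds the parts this sketch skips: validating arguments against a schema, streaming, and sending the tool result back to the model for the next step.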

Mastra: Orchestration

While AI SDK handles LLM logic beautifully, agents need orchestration.

Here's where Mastra comes in!

Mastra is an open-source agentic TypeScript framework built on top of AI SDK.

Think of it this way:

AI SDK = scaffolding for LLMs

Mastra = scaffolding for Agents

Mastra provides the core AGENT abstraction:

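```typescript
import { openai } from "@ai-sdk/openai";
import { Agent } from "@mastra/core/agent";
import { Memory } from "@mastra/memory";
// Hypothetical path: point this at wherever your tool is defined.
import { simpleTool } from "./tools/simple-tool";

export const simpleAgent = new Agent({
  name: "Simple Agent",
  // The instructions act as the system prompt.
  instructions: "You are a helpful assistant. You can use the simpleTool to do X ...",
  model: openai("gpt-5-nano"),
  memory: new Memory(),
  tools: {
    simpleTool,
  },
});
```

As you can see, it handles: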

System prompt

Model selection

Tool calling

Memory

Using these, you can create really complex agentic behavior.

And this is what many people are missing:

building agents is 50% tech and 50% behavior engineering.

Mastra gives you an amazing PRO toolkit to handle both.

It includes:

Memory curation

Runtime control

Multi-agent wiring

Using these, you can orchestrate multiple agents into a very sophisticated AI system.

And this system needs a UI, right?

AI Elements: UI

AI Elements gives you production-ready UI components out of the box.

Built with shadcn/ui, Radix, and Tailwind, it covers:

Chat flow

Output renderer

Loaders / controls / selectors

Error handling

You can build a ChatGPT-like interface in no time.

Everything is fully customizable, so you can skip the frontend wiring and UI nuances and focus on your agent logic and UX.

Mem0: Long-Term Memory

One more quick background snapshot:

The biggest shift happening in AI right now: STATELESS → STATEFUL

We're used to stateless AI that:

Forgets previous interactions

Starts from scratch every time

Feels inconsistent and robotic

Sure, we've had RAG for a while, but retrieval ≠ memory.

RAG-enabled agents still suffer from amnesia.

True memory changes everything:

Quick recall from multiple interactions

Better reasoning through context awareness

Self-improvement over time

Personalization and trust

And yes, Mastra already handles a lot of memory features (working memory, conversation memory, semantic recall).

But I like my agents to be truly personal and sharp!

I need them to be peaky: to remember specific facts across threads.

To hold all important user preferences and decisions.

This is where Mem0 comes in: it gives agents a persistent, searchable & manageable memory layer.

And it gives you another orchestration and behavior engineering tool.

Mem0 works well with Mastra and can be integrated with just a few lines of code.
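To show what "remembering across threads" means in practice, here is a toy memory layer keyed by user rather than by conversation. It is not Mem0's API: `UserMemory`, its methods, and the user IDs are hypothetical, and the naive keyword matching stands in for the semantic search a real memory layer would use.

```typescript
interface MemoryItem {
  userId: string;
  text: string;
}

class UserMemory {
  private items: MemoryItem[] = [];

  // Store a fact under the user, independent of which thread produced it.
  add(userId: string, text: string): void {
    this.items.push({ userId, text });
  }

  // Naive search: return this user's facts that contain any query word
  // (longer than two characters) as a substring.
  search(userId: string, query: string): string[] {
    const words = query
      .toLowerCase()
      .split(/\W+/)
      .filter((w) => w.length > 2);
    return this.items
      .filter((m) => m.userId === userId)
      .filter((m) => words.some((w) => m.text.toLowerCase().includes(w)))
      .map((m) => m.text);
  }
}

const memory = new UserMemory();
// A fact learned in one thread...
memory.add("user-1", "Prefers vegetarian restaurants");
memory.add("user-2", "Prefers steak houses");
// ...is recalled in a later, unrelated thread for the same user.
const recalled = memory.search("user-1", "Find restaurants for dinner");
```

The recalled facts would then be added to the prompt before the model call, which is what separates this from per-thread conversation history.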

But of course, with each additional library your agent becomes more complex and error-prone…

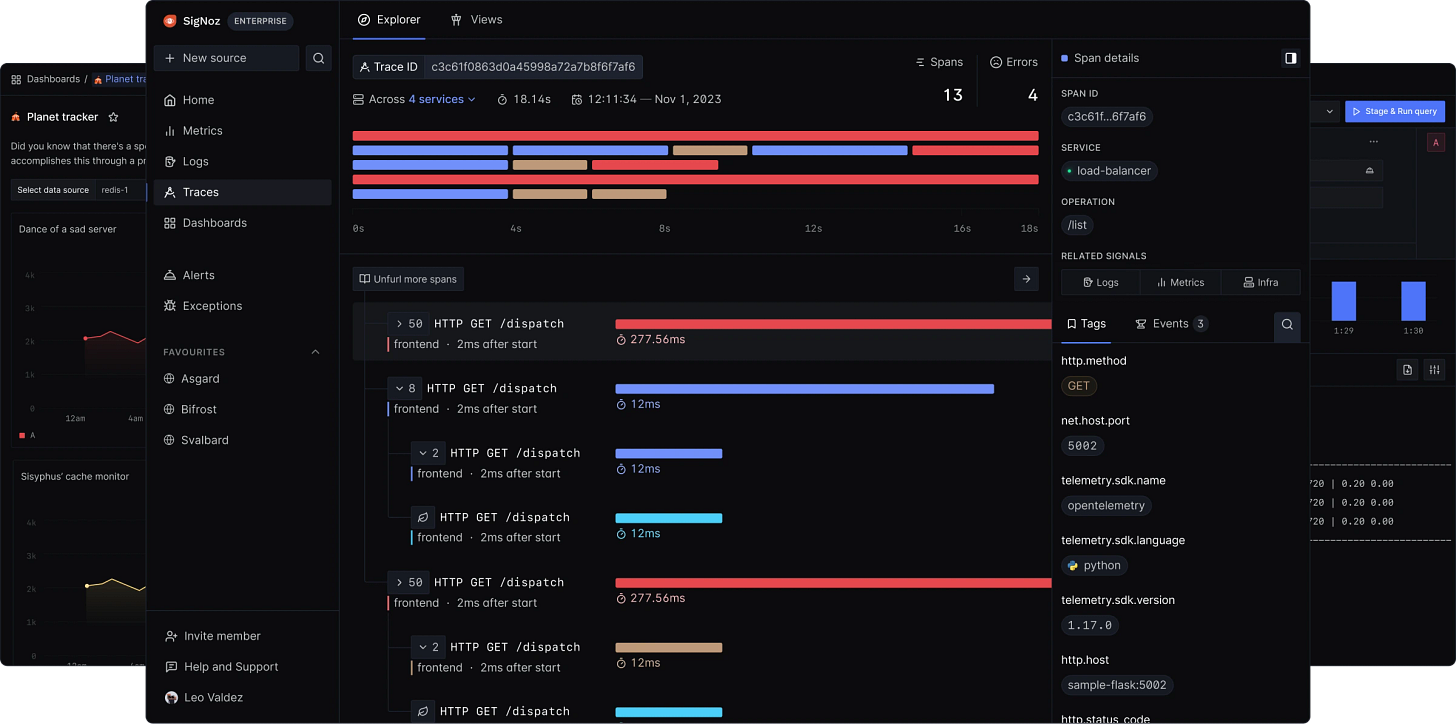

OpenTelemetry + SigNoz: Traces

Complexity is inevitable in real-life agentic systems.

When your agent performs a sophisticated non-linear task, it might involve dozens of LLM calls and tool executions.

You need to see what's happening there:

track errors and find suboptimal flows.

OpenTelemetry enables your agent to produce traces.

In SigNoz, you can query and visualize them.

You can trace:

Tool calls

Latency & token usage

Bottlenecks & failures

Mastra already has OpenTelemetry integrated! You just need to set up SigNoz routing.

This is mandatory for production:

when your agent goes off the rails at 3 AM, you need those traces.
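For intuition about what a trace contains, here is a hand-rolled sketch that records one span per agent step, with a name, duration, and status. It is not the OpenTelemetry API: `Span`, `traced`, and the step names are hypothetical, and it only handles synchronous steps, whereas real agent steps are usually async.

```typescript
interface Span {
  name: string;
  durationMs: number;
  status: "ok" | "error";
  error?: string;
}

const spans: Span[] = [];

// Run a step, record how long it took and whether it failed, then rethrow
// so the caller still sees the error.
function traced<T>(name: string, step: () => T): T {
  const start = Date.now();
  try {
    const result = step();
    spans.push({ name, durationMs: Date.now() - start, status: "ok" });
    return result;
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    spans.push({ name, durationMs: Date.now() - start, status: "error", error: message });
    throw err;
  }
}

// Hypothetical agent steps: one succeeds, one fails.
traced("tool:lookup", () => 42);
try {
  traced("tool:flaky", () => {
    throw new Error("upstream timeout");
  });
} catch {
  // The failure is already recorded as a span.
}

// The kind of question you ask at 3 AM: which steps failed?
const failures = spans.filter((s) => s.status === "error").map((s) => s.name);
```

With OpenTelemetry, the spans are exported to a backend such as SigNoz instead of an in-memory array, and the same filtering is done there as a query.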

Vercel: Deployment

Next.js → Vercel deployment is brilliant!

The entire app deploys with one command.

No separate Docker configs. No complex DevOps pipelines.

Just

`git push`

That's it!

Vercel handles:

Minimal-config serverless deploy

Continuous integration

Automatic scaling

And so much more!

Why This Stack Works

So after trying dozens of combinations, this stack is a clear winner for me.

Everything is TypeScript. Every component understands the others.

Mastra is built on AI SDK, has OpenTelemetry integrated, and natively supports Mem0.

Next.js, AI SDK, and AI Elements are all part of the Vercel ecosystem.

They all click together like LEGO blocks.

No glue code. Great developer experience. Minimalistic API.

Full observability. One command deploy.

16 months of experiments, failed integrations & all-night debugging sessions, but I finally found it: my perfect agent stack!

Good stuff, thanks for sharing Andrew 🙏 any updates since posting? What use cases have you developed these agents for?

Excellent work, Andrew. Do you have a working demo and a rough estimate of the implementation cost? What are the annual running costs? Thanks, Lee.