MCP FOR EXPERTISE DISTRIBUTION

Complete Breakdown: How I Built an MCP Server So Agents Can Operate My Method

In this guide I’ll show you step-by-step how I built an MCP server for Advanced Knowledge Systems.

The stack:

▲ Vercel [ecosystem]

NEXT.js App Router [backend]

Vercel MCP‑handler [MCP wiring]

MongoDB [database + vector index]

MUX [video storage & transcripts]

OpenAI text-embedding-3-small [vector embeddings]

Claude API [transcript enhancement]

LangChain text splitters [chunking]

Clerk [auth]

First let me give you some context.

BACKGROUND

2 years ago I created the course: Advanced Knowledge Systems.

The essence of the course is summarized in the DIKW framework.

I teach people how to build a personal knowledge system that:

Processes DATA

Organizes INFORMATION

Extracts & codifies KNOWLEDGE

Facilitates WISDOM

In just a few days I’ll be running the 14th cohort.

And with each new cohort, this paradigm shift has become more and more evident:

FROM: we build the system ourselves

TO: we use agents to build the system for us

This is huge!

It influenced not just how we BUILD but also how I share my know-hows & expertise with cohort participants.

We’re entering a new era of expertise sharing.

It forced me to rethink the ideas of knowledge sharing and expertise from first principles.

Think about it: Until now, knowledge moved primarily from person → person (or person → group).

Now there’s a new stakeholder: the AI agent.

Agents like Claude Code, Codex, Cursor, and Warp have changed everything.

I would argue that they are the smartest and most capable tools we have ever had

[Like ever… as collective humanity… in any domain]

They are brilliant!

They don’t just write code; they set up environments, manage terminals, connect tools, observe other apps and DO so much more!

This is the crucial part:

For them to be most efficient, they need context: domain-specific knowledge.

And not in the form of static docs & fixed guidelines.

They clutter the context and get outdated very fast.

But knowledge in the form of live, updatable, granular, queryable & searchable knowledge bases with versioning & attribution.

MCPs

This is where MCPs come into play.

What Is an MCP? (Quick Primer)

MCP = Model Context Protocol

Think of it as a smart API for agents:

It’s a standardized server interface that exposes:

Knowledge (facts & frameworks)

Tools (functions & procedures)

Instructions (how to make those useful)

MCP = Toolkit for agents + Guidelines for using it

MCP turns expert methods into an interactive toolkit.

With this toolkit, agents can learn, plan, and act by actively engaging with specific know-hows.
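To make the primer concrete, here is a toy, dependency-free TypeScript sketch of what an MCP server answers. MCP messages are JSON-RPC 2.0, and `initialize`, `tools/list`, and `tools/call` are real protocol methods; everything else (the `handle` dispatcher, the `search_frameworks` tool, its data, and the trimmed-down `initialize` result) is a hypothetical simplification. A real server would use an SDK such as Vercel’s mcp-handler instead of hand-rolled dispatch.

```typescript
// Toy model of what an MCP server exposes: knowledge, tools, and instructions.
// Hypothetical and simplified; not the official MCP SDK.

type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
};

type JsonRpcResponse = {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
};

type ToolResult = { content: { type: "text"; text: string }[] };

type Tool = {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>; // JSON Schema describing the arguments
  run: (args: Record<string, unknown>) => ToolResult;
};

// Knowledge: facts and frameworks the server can draw on.
const frameworks: Record<string, string> = {
  dikw: "Data -> Information -> Knowledge -> Wisdom",
};

// Tools: functions an agent can call.
const tools: Tool[] = [
  {
    name: "search_frameworks",
    description: "Look up a framework by key",
    inputSchema: {
      type: "object",
      properties: { key: { type: "string" } },
      required: ["key"],
    },
    run: (args) => ({
      content: [{ type: "text", text: frameworks[String(args.key)] ?? "not found" }],
    }),
  },
];

// Instructions: guidance on how to use the tools, sent to the client at initialization.
const instructions = "Call search_frameworks before proposing a knowledge-system design.";

function handle(req: JsonRpcRequest): JsonRpcResponse {
  const ok = (result: unknown): JsonRpcResponse => ({ jsonrpc: "2.0", id: req.id, result });
  const fail = (code: number, message: string): JsonRpcResponse => ({
    jsonrpc: "2.0",
    id: req.id,
    error: { code, message },
  });

  switch (req.method) {
    case "initialize":
      // Simplified: a real result also carries protocolVersion, capabilities, and serverInfo.
      return ok({ instructions });
    case "tools/list":
      // Advertise tools without exposing their implementation.
      return ok({ tools: tools.map(({ name, description, inputSchema }) => ({ name, description, inputSchema })) });
    case "tools/call": {
      const tool = tools.find((t) => t.name === req.params?.name);
      if (!tool) return fail(-32602, `Unknown tool: ${req.params?.name}`);
      return ok(tool.run(req.params?.arguments ?? {}));
    }
    default:
      return fail(-32601, "Method not found"); // standard JSON-RPC error code
  }
}
```

The split mirrors the primer: `frameworks` is the knowledge, `tools` are the callable procedures, and `instructions` tell the agent how to combine them.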

Knowledge is a kind of information that’s true and useful

— David Deutsch

MCPs make knowledge operational!

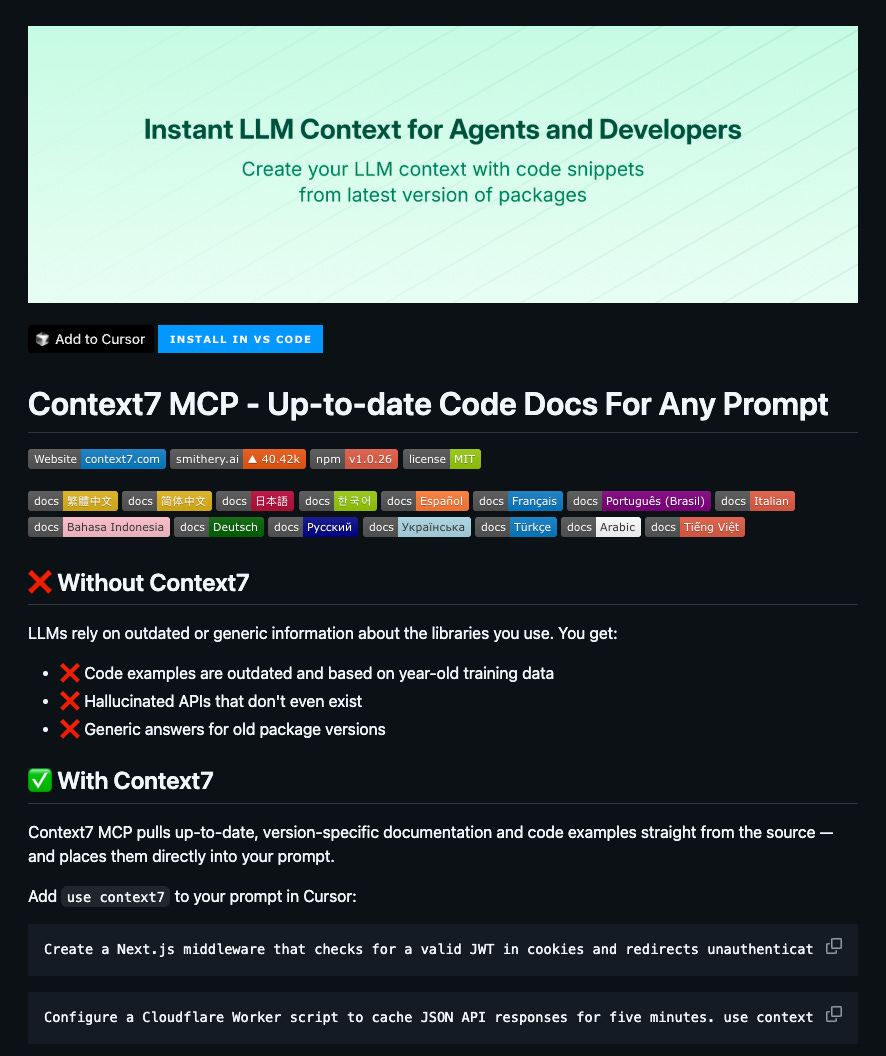

The perfect example here:

Context7

Context7 MCP integrates up-to-date documentation for hundreds of libraries.

Agents can pull fresh knowledge instantly. It’s always up to date and useful.

With Context7 agents don’t rely on stale knowledge from the model weights.

And you do not have to worry that they import outdated libraries or build for non-existent APIs.

Inspired by Context7 [I use it ALL THE TIME] I decided to build my own MCP server following the same paradigm.

An MCP server that acts as a gateway to my methodology, frameworks, and know-hows.

FOR PEOPLE: it provides a way to interact, search, and learn.

FOR AGENTS: it gives the instructions & tools to build working systems.

Now let me show you how I did it.

BREAKDOWN

MVP

Of course I had a grand vision for the project, but I wanted to build the prototype fast.

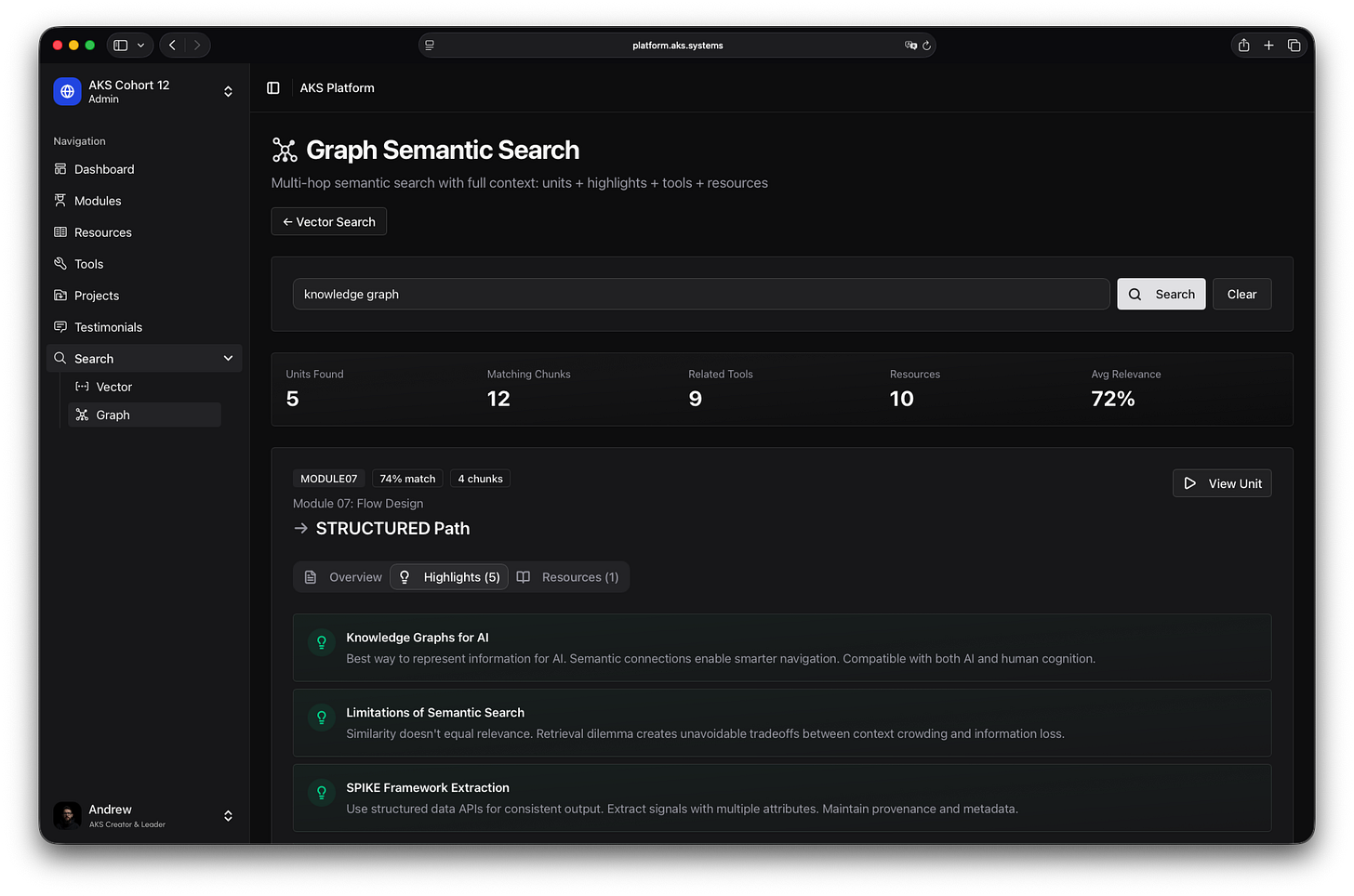

So now my MCP server exposes 2 core capabilities:

Semantic Search

Fast vector search on course content: lectures, resources, frameworks.

Graph Search

Multi-hop exploration through connected entities: concepts, highlights, resources & tools.

Multi-hop means: when semantic search finds a specific chunk → it can hop to the unit that chunk came from [hop 1] → and from the unit to its connected resources/highlights/tools [hop 2].

Cohort participants can connect the MCP to any agent and get a companion to the course.
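The two hops can be sketched in memory before touching a database. This is an illustrative TypeScript sketch, not the author’s code: the `Chunk`/`Unit`/`Entity` shapes and the `unitId`, `resourceIds`, `highlightIds`, and `toolIds` fields are assumptions.

```typescript
// Hypothetical in-memory sketch of the two-hop lookup:
// chunk -> unit (hop 1) -> resources/highlights/tools (hop 2).

type Chunk = { id: string; unitId: string; text: string };
type Unit = { id: string; title: string; resourceIds: string[]; highlightIds: string[]; toolIds: string[] };
type Entity = { id: string; kind: "resource" | "highlight" | "tool"; title: string };
type HopResult = { chunk: Chunk; unit: Unit | undefined; related: Entity[] };

function expandHit(chunk: Chunk, units: Map<string, Unit>, entities: Map<string, Entity>): HopResult {
  // Hop 1: from the matched chunk to the unit it was cut from.
  const unit = units.get(chunk.unitId);
  if (!unit) return { chunk, unit: undefined, related: [] };

  // Hop 2: from the unit to everything it links to.
  const ids = [...unit.resourceIds, ...unit.highlightIds, ...unit.toolIds];
  const related = ids
    .map((id) => entities.get(id))
    .filter((e): e is Entity => e !== undefined); // skip dangling links

  return { chunk, unit, related };
}
```

Dangling links (ids with no matching entity) are skipped instead of failing the whole hit, so one broken reference does not hide an otherwise good result.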

Stack

I started with what I already had:

Course platform built on NEXT.js

MongoDB with modules, units, resources, projects

Clerk auth

For maximum reusability I wanted to build the MCP on top of my existing platform infrastructure: Same backend. Same auth. Same data. Minimal duplication.

Here is the architecture I came up with:

Architecture

```text
AGENT
  ↓↑
MCP Server
  ↓↑
NEXT.js (App Router) | ▲ Vercel
  ↓↑
Search Libraries [based on Mongo best practices]
  ↓↑
MongoDB Atlas
├─ units/resources/highlights/tools [traditional collections]
└─ embeddings [collection with vector index]
  ↑
PIPELINE: chunking ← enhancement ← transcripts
  ↑
MUX (video)
```

Let’s explore all components and logic behind each.

Database

I already use Mongo as the main database for the course platform.

And I wanted to keep all course data in one place:

One system for units, resources, projects, and embeddings

Vector index for semantic search

Native lookups and graph‑style hops

Before this project I knew Mongo’s RAG capabilities were quite good, but I discovered that it can do way more!

In Mongo you can implement:

Vector search for semantic retrieval

Graph multi-hop traversal for better reasoning

They enable both traditional RAG and GraphRAG [without federating data across multiple databases].

Storing vector embeddings in Mongo is super easy:

You just need to create a vector index.

Your coding agent can easily do it for you😉
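A minimal sketch of that index and the query that uses it, assuming an `embeddings` collection whose documents store the vector in an `embedding` field and the parent unit in `unitId`. The index-definition format and the `$vectorSearch` stage are Atlas Vector Search features; the index name `vector_index`, the field names, and the `numCandidates = 20 × limit` heuristic are illustrative choices. `text-embedding-3-small` returns 1536-dimensional vectors by default.

```typescript
// Sketch of an Atlas Vector Search index definition and a matching query.
// Index name, field names, and limits are hypothetical.

const EMBEDDING_DIMENSIONS = 1536; // default output size of text-embedding-3-small

// Index definition for the embeddings collection.
const vectorIndexDefinition = {
  fields: [
    { type: "vector", path: "embedding", numDimensions: EMBEDDING_DIMENSIONS, similarity: "cosine" },
    // Filter field so a search can be restricted to one unit.
    { type: "filter", path: "unitId" },
  ],
};

// Aggregation pipeline for semantic search over the embeddings collection.
function semanticSearchPipeline(queryVector: number[], limit = 5, unitId?: string) {
  if (queryVector.length !== EMBEDDING_DIMENSIONS) {
    throw new Error(`Expected ${EMBEDDING_DIMENSIONS} dimensions, got ${queryVector.length}`);
  }
  const vectorSearch: Record<string, unknown> = {
    index: "vector_index",
    path: "embedding",
    queryVector,
    numCandidates: limit * 20, // approximate search: over-fetch candidates for better recall
    limit,
  };
  if (unitId) vectorSearch.filter = { unitId: { $eq: unitId } };
  return [
    { $vectorSearch: vectorSearch },
    { $project: { _id: 0, text: 1, unitId: 1, score: { $meta: "vectorSearchScore" } } },
  ];
}
```

With a recent Node.js driver, the index could be created with `collection.createSearchIndex({ name: "vector_index", type: "vectorSearch", definition: vectorIndexDefinition })` and queried with `collection.aggregate(semanticSearchPipeline(queryVector))`; check your driver version’s documentation before relying on this.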

Graph is a bit more complicated: Mongo is not a graph database.

It’s a document database.

That means I had to implement graph traversal on top of this paradigm.
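One way to layer graph hops on a document database is to append `$lookup` stages after the vector search, and to use `$graphLookup` when links between entities (e.g. concept to concept) have variable depth. A sketch under assumptions: the `units`, `resources`, `highlights`, `tools`, and `concepts` collections and all field names are hypothetical, and this is not necessarily how MongoDB’s own GraphRAG examples structure it.

```typescript
// Sketch: graph-style hops as aggregation stages appended after $vectorSearch.
// Collection and field names are hypothetical.

type Stage = Record<string, unknown>;

function graphHopStages(): Stage[] {
  return [
    // Hop 1: chunk -> the unit it was cut from.
    { $lookup: { from: "units", localField: "unitId", foreignField: "_id", as: "unit" } },
    { $unwind: "$unit" }, // one unit per chunk; drops chunks whose unit is missing
    // Hop 2: unit -> linked materials. When localField is an array, $lookup matches any element.
    { $lookup: { from: "resources", localField: "unit.resourceIds", foreignField: "_id", as: "resources" } },
    { $lookup: { from: "highlights", localField: "unit.highlightIds", foreignField: "_id", as: "highlights" } },
    { $lookup: { from: "tools", localField: "unit.toolIds", foreignField: "_id", as: "tools" } },
  ];
}

// Variable-depth traversal over concept-to-concept links.
// maxDepth 0 returns only the concepts the unit links to directly.
function conceptNeighborhoodStage(maxDepth: number): Stage {
  return {
    $graphLookup: {
      from: "concepts",
      startWith: "$unit.conceptIds",
      connectFromField: "relatedConceptIds",
      connectToField: "_id",
      as: "conceptGraph",
      maxDepth,
      depthField: "hops", // records how many hops each concept is from the start
    },
  };
}

function graphSearchPipeline(vectorStage: Stage, conceptDepth?: number): Stage[] {
  const stages = [vectorStage, ...graphHopStages()];
  if (conceptDepth !== undefined) stages.push(conceptNeighborhoodStage(conceptDepth));
  return stages;
}
```

`$unwind` discards chunks whose unit is missing; add `preserveNullAndEmptyArrays: true` to that stage to keep them instead.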

Fortunately, they have this amazing repo for developers with all the examples.

First I copied their Graph RAG approach.

Then I quickly scaffolded a simpler dataset for tests & evals → it worked flawlessly!

Now my DB is optimized for both Web App (platform) and RAG:

Traditional document storage

Vector storage + vector index

Schema aligned with multi-hop graph retrieval pattern

Next step: a content pipeline to make my course videos accessible to LLMs:

video → transcripts → enhancement → chunking → embeddings → ingestion
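That chain is essentially function composition. This sketch (hypothetical names throughout) injects each step as a function, so the real Mux, Claude, LangChain, OpenAI, and MongoDB calls can be swapped in or stubbed for tests.

```typescript
// Hypothetical orchestration of:
// video -> transcript -> enhancement -> chunking -> embeddings -> ingestion.

type ChunkRecord = { unitId: string; index: number; text: string; embedding: number[] };

interface PipelineSteps {
  fetchTranscript(videoId: string): Promise<string>; // e.g. Mux text track
  enhance(transcript: string): Promise<string>; // e.g. LLM cleanup
  chunk(text: string): string[]; // e.g. a text splitter
  embed(chunks: string[]): Promise<number[][]>; // e.g. an embeddings API
  upsert(records: ChunkRecord[]): Promise<void>; // e.g. MongoDB bulk write
}

async function ingestVideo(videoId: string, unitId: string, steps: PipelineSteps): Promise<number> {
  const raw = await steps.fetchTranscript(videoId);
  const clean = await steps.enhance(raw);
  const chunks = steps.chunk(clean);
  const vectors = await steps.embed(chunks);
  if (vectors.length !== chunks.length) throw new Error("embedding count mismatch");
  // Link every chunk back to its unit so graph hops can start from it later.
  const records = chunks.map((text, index) => ({ unitId, index, text, embedding: vectors[index] }));
  await steps.upsert(records);
  return records.length;
}
```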

Pipeline

I’m using MUX as my video platform. It’s pretty amazing!

Essentially, it’s developer-first video hosting.

It allows you to access all video assets (including transcripts) programmatically.
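A sketch of the transcript-fetching step, assuming a public playback ID and the `https://stream.mux.com/{PLAYBACK_ID}/text/{TRACK_ID}.vtt` text-track URL pattern from Mux’s documentation (verify it against the current docs; signed playback requires a token). Most of the work is flattening WebVTT captions into plain text: drop the header, cue numbers, and timing lines, and strip inline tags. The function names are hypothetical.

```typescript
// Sketch: build a Mux text-track URL and flatten WebVTT captions into plain text.
// The URL pattern assumes a public playback ID; verify it against the current Mux docs.

function muxTextTrackUrl(playbackId: string, trackId: string, format: "vtt" | "txt" = "vtt"): string {
  return `https://stream.mux.com/${encodeURIComponent(playbackId)}/text/${encodeURIComponent(trackId)}.${format}`;
}

// Keep only cue text: skip the WEBVTT header, NOTE/STYLE blocks, cue ids, and timing lines.
function vttToPlainText(vtt: string): string {
  const blocks = vtt.replace(/\r\n/g, "\n").split(/\n\s*\n/);
  const lines: string[] = [];
  for (const block of blocks) {
    const rows = block.split("\n").map((r) => r.trim()).filter(Boolean);
    const timingIndex = rows.findIndex((r) => r.includes("-->"));
    if (timingIndex === -1) continue; // not a cue (header, NOTE, or STYLE block)
    for (const row of rows.slice(timingIndex + 1)) {
      lines.push(row.replace(/<[^>]+>/g, "")); // strip inline tags like <b> or <v Speaker>
    }
  }
  return lines.join(" ").replace(/\s+/g, " ").trim();
}

async function fetchTranscript(playbackId: string, trackId: string): Promise<string> {
  const res = await fetch(muxTextTrackUrl(playbackId, trackId, "vtt"));
  if (!res.ok) throw new Error(`Mux transcript request failed: ${res.status}`);
  return vttToPlainText(await res.text());
}
```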

So I quickly built a content pipeline:

```text
┌─────────────────────────────────┐
│ MUX Video                       │
│ (platform)                      │
└────────────────┬────────────────┘
                 │
                 V
┌─────────────────────────────────┐
│ Fetch Transcript                │
│ (programmatically)              │
└────────────────┬────────────────┘
                 │
                 V
┌─────────────────────────────────┐
│ Enhance with LLM                │
│ Claude API                      │
│ [clean, denoise, normalize]     │
└────────────────┬────────────────┘
                 │
                 V
┌─────────────────────────────────┐
│ Chunk Text                      │
│ LangChain splitters             │
└────────────────┬────────────────┘
                 │
                 V
┌─────────────────────────────────┐
│ Generate Embeddings             │
│ OpenAI text-embedding-3-small   │
└────────────────┬────────────────┘
                 │
                 V
┌─────────────────────────────────┐
│ Upsert to MongoDB               │
│ ├─ embeddings collection        │
│ │  [with vector index]          │
│ └─ link with units + metadata   │
└─────────────────────────────────┘
```

I was able to vectorize and ingest all the course content in just an hour!
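The author used LangChain’s splitters for the chunking step. To keep the example dependency-free, this sketch swaps in a simpler technique, a fixed-size word window with overlap, so that text near a chunk boundary appears in both neighboring chunks. The sizes are placeholders, not the author’s settings, and `chunkWords` is a hypothetical name.

```typescript
// Simplified stand-in for a text splitter (not LangChain's algorithm):
// fixed-size windows of words, overlapping so context spans chunk boundaries.
function chunkWords(text: string, chunkSize = 200, overlap = 40): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) throw new Error("overlap must be in [0, chunkSize)");
  const words = text.split(/\s+/).filter(Boolean);
  const chunks: string[] = [];
  const step = chunkSize - overlap; // how far each window advances
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    if (start + chunkSize >= words.length) break; // this window already reached the end
  }
  return chunks;
}
```

With LangChain itself, the equivalent step would be `new RecursiveCharacterTextSplitter({ chunkSize, chunkOverlap })` from `@langchain/textsplitters` followed by `await splitter.splitText(text)`; that splitter measures size in characters and prefers to split on paragraph breaks, then line breaks, then spaces, unlike this word window.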

Units were already connected to resources, tools, and highlights. So now everything lives in Mongo: clean, queryable, and fast.
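A sketch of the embedding and upsert steps, assuming documents in the `embeddings` collection carry the chunk text, its vector, and a `unitId` link back to the unit. The `/v1/embeddings` endpoint with its `model`/`input` request fields and `data[].embedding` response field is OpenAI’s; the document shape, the `unitId:chunkIndex` id scheme, and the helper names are hypothetical.

```typescript
// Sketch: embed chunks via OpenAI's embeddings endpoint and build idempotent upserts
// that link each chunk to its unit. Document shape and id scheme are hypothetical.

const EMBEDDING_MODEL = "text-embedding-3-small";

type EmbeddingDoc = {
  _id: string;
  unitId: string;
  chunkIndex: number;
  text: string;
  embedding: number[];
  model: string;
};

async function embedTexts(texts: string[], apiKey: string): Promise<number[][]> {
  const res = await fetch("https://api.openai.com/v1/embeddings", {
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
    body: JSON.stringify({ model: EMBEDDING_MODEL, input: texts }),
  });
  if (!res.ok) throw new Error(`Embeddings request failed: ${res.status}`);
  const json = (await res.json()) as { data: { index: number; embedding: number[] }[] };
  // Sort by index so vectors line up with the input order.
  return json.data.sort((a, b) => a.index - b.index).map((d) => d.embedding);
}

function buildUpserts(unitId: string, chunks: string[], vectors: number[][]) {
  if (chunks.length !== vectors.length) throw new Error("chunks and vectors differ in length");
  return chunks.map((text, chunkIndex) => {
    const _id = `${unitId}:${chunkIndex}`; // deterministic: re-runs overwrite instead of duplicating
    const fields: Omit<EmbeddingDoc, "_id"> = {
      unitId, // link back to the unit for graph hops
      chunkIndex,
      text,
      embedding: vectors[chunkIndex],
      model: EMBEDDING_MODEL,
    };
    return { updateOne: { filter: { _id }, update: { $set: fields }, upsert: true } };
  });
}
```

The result of `buildUpserts` can be passed to the MongoDB driver’s `collection.bulkWrite(...)`. Because ids are deterministic, re-ingesting a unit overwrites its chunks; if a transcript gets shorter, leftover chunks (with `chunkIndex` at or above the new count) would still need deleting.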

With all the data in Mongo, it’s time to move to APIs.

NEXT.js (API for Platform and MCP)

NEXT is not just a frontend React framework [a common misconception that still persists]

It’s the backbone of the entire system!

NEXT provides a super neat architecture:

your business logic, API routes, and UI all live in the same codebase.

No need to jump between separate backend and frontend repos.

Since I wanted all MCP capabilities on the platform too, I started there:

I integrated the search tools directly into the course platform app (NEXT.js, App Router) so people could use them to navigate the cohort.

Then I extracted the logic into clean libraries and a simple pattern:

Library + interface + API endpoint
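A minimal sketch of the library + interface + API endpoint pattern. The keyword-overlap search stands in for the real MongoDB queries, and `searchCourse`, `SearchHit`, and an `app/api/search/route.ts` location are hypothetical; only the shape of a Next.js App Router route handler (an exported `GET` that takes a `Request` and returns a `Response`) reflects the framework.

```typescript
// Interface: the typed contract shared by the web app and the MCP server.
interface SearchHit {
  unitId: string;
  text: string;
  score: number;
}

// Library: business logic with no HTTP concerns (a real one would query MongoDB).
const corpus: SearchHit[] = [
  { unitId: "u1", text: "DIKW: data, information, knowledge, wisdom", score: 0 },
  { unitId: "u2", text: "Choosing a knowledge hub", score: 0 },
];

export async function searchCourse(query: string, limit = 5): Promise<SearchHit[]> {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);
  return corpus
    .map((hit) => ({ ...hit, score: terms.filter((t) => hit.text.toLowerCase().includes(t)).length }))
    .filter((hit) => hit.score > 0)
    .sort((a, b) => b.score - a.score) // most matching terms first
    .slice(0, limit);
}

// API endpoint: a thin route handler that validates input and delegates to the library.
export async function GET(request: Request): Promise<Response> {
  const url = new URL(request.url);
  const q = url.searchParams.get("q")?.trim();
  if (!q) return Response.json({ error: "Missing q" }, { status: 400 });
  const requested = Number(url.searchParams.get("limit"));
  const limit = Number.isInteger(requested) && requested > 0 ? Math.min(requested, 20) : 5;
  return Response.json({ results: await searchCourse(q, limit) });
}
```

Because the handler is only a thin adapter, an MCP tool can import the same `searchCourse` function directly instead of going through HTTP. Auth checks (Clerk, in the author’s setup) would sit in the handler before the library call.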

So the course platform and MCP server could share:

Same DB connections

Same auth (Clerk)

Same business logic (search libraries)

Same API infrastructure (App Router)

This way one backend can power both:

The web platform (for students)

The MCP server (for agents)

Both are enabled to:

Access all course content via semantic search

Hop across related units/resources/tools

Pull supporting materials (examples, checklists, frameworks)

Get structured context in JSON

Reusability is the huge win here! One full-stack TypeScript application on the App Router. Auth is unified too: the same permissions model everywhere.

So now we have everything ready! It’s time to add the MCP layer.

MCP-handler

Okay, I know I praise Vercel A LOT!

But here’s where Vercel’s ecosystem REALLY shines:

REUSABILITY & INTEGRATION

Vercel provides its own MCP-handler.

So I just wrapped two API endpoints as MCP tools and exposed them via mcp‑handler.

Like this (example from the Vercel docs):

```typescript
// app/api/[transport]/route.ts
import { z } from 'zod';
import { createMcpHandler } from 'mcp-handler';

const handler = createMcpHandler(
  (server) => {
    // Register a tool: name, description, input schema (zod), and callback.
    server.tool(
      'roll_dice',
      'Rolls an N-sided die',
      { sides: z.number().int().min(2) },
      async ({ sides }) => {
        const value = 1 + Math.floor(Math.random() * sides);
        return {
          content: [{ type: 'text', text: `🎲 You rolled a ${value}!` }],
        };
      },
    );
  },
  {}, // server options
  { basePath: '/api' }, // must match where the [transport] route lives
);

// Expose the handler as the route's GET, POST, and DELETE methods.
export { handler as GET, handler as POST, handler as DELETE };
```

That’s it. The mcp-handler handles all the MCP logic and primitives.
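Applied to the course platform, the two tools could be thin callbacks around the shared search libraries. A sketch under assumptions: `searchCourse`, `expandUnit`, the tool names, and the JSON shape are hypothetical stand-ins; the `{ content: [{ type: 'text', text }] }` return shape mirrors the Vercel example. Each callback would be passed to `server.tool(...)` inside `createMcpHandler`.

```typescript
// Sketch: shared libraries wrapped as MCP tool callbacks (all names hypothetical).
// Registration inside createMcpHandler((server) => { ... }) might look like:
//   server.tool("semantic_search", "Search course content",
//     { query: z.string(), limit: z.number().int().min(1).max(20).optional() }, semanticSearchTool);

type Hit = { unitId: string; text: string; score: number };
type ToolResponse = { content: { type: "text"; text: string }[] };

// Stand-in library functions; real versions would run the MongoDB pipelines.
async function searchCourse(query: string, limit: number): Promise<Hit[]> {
  return [{ unitId: "u1", text: `Result for "${query}"`, score: 0.92 }].slice(0, limit);
}
async function expandUnit(unitId: string): Promise<{ unitId: string; resources: string[] }> {
  return { unitId, resources: ["DIKW checklist"] };
}

// Structured data serialized as JSON text, so agents can parse it reliably.
function asJson(data: unknown): ToolResponse {
  return { content: [{ type: "text", text: JSON.stringify(data, null, 2) }] };
}

export async function semanticSearchTool({ query, limit = 5 }: { query: string; limit?: number }): Promise<ToolResponse> {
  const hits = await searchCourse(query, limit);
  if (hits.length === 0) return { content: [{ type: "text", text: `No results for "${query}".` }] };
  return asJson({ query, hits });
}

export async function graphSearchTool({ query }: { query: string }): Promise<ToolResponse> {
  // Start from the best semantic hit, then hop to its unit's connected materials.
  const [top] = await searchCourse(query, 1);
  if (!top) return { content: [{ type: "text", text: "No starting node found." }] };
  return asJson({ start: top, neighborhood: await expandUnit(top.unitId) });
}
```

Returning JSON inside the plain `text` content type keeps outputs structured for agents while staying within what every MCP client can display.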

Now we have:

Same backend, same auth, same data [I know, I know, I’ve already said this 100 times😁]

Now callable from Claude Desktop, ChatGPT, Claude Code, Cursor, Warp…

[and others] it is compatible with pretty much any MCP client

Tools return clean, structured outputs for agents to act on

And here we are!

Here it is in action:

The Warp agent built a framework for selecting the optimal Knowledge Hub [fully aligned with the AKS approach]

Of course, it will help you apply this framework next.

IF YOU WANT TO GET ACCESS TO THIS MCP

this is your last chance to jump into AKS Cohort 14 [we have 2 spots left]

What It Enables for Learners & Builders

To summarize:

Learn-by-doing: run the exact procedure an expert would → install, connect, test, iterate

Explainability: every step is documented and linked back to sources/graph nodes

Operationalization: converts inputs → outputs, following know-hows non-linearly

Acceleration: from idea to system prototype in just hours

VISION

Okay, here’s why this is WAAAY BIGGER than just a course tool.

First I want to emphasize something:

My goal was not just to make course content searchable.

But to ENCODE my methodology into something agents could OPERATE WITH.

Every search query returns not just facts & opinions → but context, connections & next steps. Every graph hop surfaces related frameworks & implementation patterns.

This isn’t documentation.

It’s CALLABLE EXPERTISE.

And IT CHANGES everything:

If I can do this for knowledge management and system design, you can do this for your domain.

The technical pattern here is universal:

Take domain expertise

Structure it (relationships, context, provenance)

Make it queryable & actionable

Expose it through MCPs

Let agents apply it at scale
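The five steps can be sketched as data plus two functions. Everything here (`ExpertiseEntry`, `queryExpertise`, `toToolDefinition`, the field names) is a hypothetical illustration: entries carry their relationships and provenance, queries return attribution with every hit, and the tool description uses the `name`/`description`/`inputSchema` (JSON Schema) fields that an MCP `tools/list` response contains.

```typescript
// Step 2: structured expertise with relationships and provenance (hypothetical shape).
interface ExpertiseEntry {
  id: string;
  kind: "framework" | "guideline" | "heuristic";
  title: string;
  body: string;
  related: string[]; // ids of connected entries
  source: { author: string; version: string }; // attribution and versioning
}

// Step 3: queryable, returning related entries and a citation with every hit.
function queryExpertise(entries: ExpertiseEntry[], term: string) {
  const t = term.toLowerCase();
  const byId = new Map(entries.map((e) => [e.id, e] as const));
  return entries
    .filter((e) => `${e.title} ${e.body}`.toLowerCase().includes(t))
    .map((e) => ({
      id: e.id,
      title: e.title,
      body: e.body,
      related: e.related
        .map((id) => byId.get(id)?.title)
        .filter((title): title is string => title !== undefined),
      citation: `${e.source.author}, v${e.source.version}`,
    }));
}

// Step 4: an MCP-style tool description an agent can discover and call.
function toToolDefinition(domain: string, version: string) {
  return {
    name: `query_${domain}_expertise`,
    description: `Search ${domain} frameworks, guidelines, and heuristics (method version ${version}).`,
    inputSchema: {
      type: "object",
      properties: { term: { type: "string", description: "What to look up" } },
      required: ["term"],
    },
  };
}
```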

This isn’t about courses.

It’s about how expertise moves in an agent-first world.

Where MCP is becoming the No. 1 distribution mechanism for know-hows & best practices.

BONUS: FOR EXPERTS

What does this revolution mean for experts who need channels to distribute their know-hows and frameworks?

Well, instead of publishing static PDFs or videos, you can now publish executable methods.

Your knowledge is available as a set of callable services:

Versioned and testable

Scalable to thousands of users

Applied systematically by agents

And HERE IS THE THING very few people have realized yet: you don’t need to publish CODE.

You publish:

Approaches

Guidelines

Frameworks

Decision heuristics

The agent will handle the implementation, following the user’s context and the relevant aspects of your method! [Not flawlessly yet, but think 6 months from now, when the next generation of models is here.]

And this goes waaaaay beyond tech-heavy domains.

For example:

For Nutrition Experts

Your method can be embedded into a personalized nutrition assistant

Agent accesses [PRIVATELY]:

Client’s health data from wearables (metrics, activity, sleep)

Food intake patterns and logs

Parsed and mapped food photos

IoT kitchen devices & meal prep

And with your expertise MCP, it can:

Build personalized plans grounded in YOUR methodology

Deliver timely interventions based on YOUR protocols

Flag nutrient gaps and suggest substitutions from YOUR system

Adapt recommendations as data evolves

For Coaches

Your framework can become a scalable coaching companion

Agent accesses data [PRIVATELY]:

Client’s session transcripts & recordings

Journals & reflections

Progress logs & goal tracking

Assessment results (CliftonStrengths, Big Five, …)

And with your expert MCP, it can:

Apply YOUR coaching model to each client’s situation

Ask probing questions from YOUR methodology

Surface relevant frameworks and tools from YOUR playbook

Track growth patterns using YOUR checklists

Suggest next steps aligned with YOUR approach

For Analysts & Strategists

Your analytical frameworks become decision-support systems.

Agent accesses data [PRIVATELY]:

Client’s business metrics & KPIs

Market research & competitive intel

Strategic documents & planning artifacts

Historical decisions & outcomes

And with your expert MCP, it can:

Apply YOUR UNIQUE analytical lens to each situation

Generate scenarios using YOUR strategic frameworks

Validate reasoning against YOUR specific set of criteria

Run analyses using methods curated by YOU

Produce reports in YOUR specific format and style

NOT generic advice.

Your specific methodology.

Applied to Client’s context.

At scale.

The pattern is the same:

MCPs turn expertise into callable procedures.

Agents apply procedures to specific context and turn them into outcomes.

We’re entering a new era of expertise sharing.

The next decade’s leverage comes from agents equipped with expert know-hows.

If you want your ideas used: DON’T WRITE A BOOK → expose them through MCP!

Okay, now it’s time to ship something🚀

If you want me to help you build the MCP system:

DM me here or enroll in the Accelerated/Discovery track of AKS